POS-Tagging and Syntactic Parsing with R

Martin Schweinberger

31 May, 2023

Introduction

This tutorial introduces part-of-speech tagging and syntactic parsing using R. This tutorial is aimed at beginners and intermediate users of R with the aim of showcasing how to annotate textual data with part-of-speech (pos) tags and how to syntactically parse textual data using R. The aim is not to provide a fully-fledged analysis but rather to show and exemplify selected useful methods associated with pos-tagging and syntactic parsing. Another highly recommendable tutorial on part-of-speech tagging in R with UDPipe is available here and another tutorial on pos-tagging and syntactic parsing by Andreas Niekler and Gregor Wiedemann can be found here (see Wiedemann and Niekler 2017).

To be able to follow this tutorial, we suggest you check out and

familiarize yourself with the content of the following R

Basics tutorials:

- Getting started with R

- Loading, saving, and generating data in R

- String Processing in R

- Regular Expressions in R

Click here1 to

download the entire R Notebook for this

tutorial.

Click

here

to open an interactive Jupyter notebook that allows you to execute,

change, and edit the code as well as to upload your own data.

Part-Of-Speech Tagging

Many analyses of language data require that we distinguish different parts of speech. In order to determine the word class of a certain word, we use a procedure which is called part-of-speech tagging (commonly referred to as pos-, pos-, or PoS-tagging). pos-tagging is a common procedure when working with natural language data. Despite being used quite frequently, it is a rather complex issue that requires the application of statistical methods that are quite advanced. In the following, we will explore different options for pos-tagging and syntactic parsing.

Parts-of-speech, or word categories, refer to the grammatical nature or category of a lexical item, e.g. in the sentence Jane likes the girl each lexical item can be classified according to whether it belongs to the group of determiners, verbs, nouns, etc. pos-tagging refers to a (computation) process in which information is added to existing text. This process is also called annotation. Annotation can be very different depending on the task at hand. The most common type of annotation when it comes to language data is part-of-speech tagging where the word class is determined for each word in a text and the word class is then added to the word as a tag. However, there are many different ways to tag or annotate texts.

Pos–tagging assigns part-of-speech tags to character strings (these represent mostly words, of course, but also encompass punctuation marks and other elements). This means that pos–tagging is one specific type of annotation, i.e. adding information to data (either by directly adding information to the data itself or by storing information in e.g. a list which is linked to the data). It is important to note that annotation encompasses various types of information such as pauses, overlap, etc. pos–tagging is just one of these many ways in which corpus data can be enriched. Sentiment Analysis, for instance, also annotates texts or words with respect to its or their emotional value or polarity.

Annotation is required in many machine-learning contexts because annotated texts are commonly used as training sets on which machine learning or deep learning models are trained that then predict, for unknown words or texts, what values they would most likely be assigned if the annotation were done manually. Also, it should be mentioned that by many online services offer pos-tagging (e.g. here or here.

When pos–tagged, the example sentence could look like the example below.

- Jane/NNP likes/VBZ the/DT girl/NN

In the example above, NNP stands for proper noun

(singular), VBZ stands for 3rd person singular present

tense verb, DT for determiner, and NN for

noun(singular or mass). The pos-tags used by the

openNLPpackage are the Penn

English Treebank pos-tags. A more elaborate description of the tags

can be found here which is summarised below:

Tag | Description | Examples |

|---|---|---|

CC | Coordinating conjunction | and, or, but |

CD | Cardinal number | one, two, three |

DT | Determiner | a, the |

EX | Existential there | There/EX was a party in progress |

FW | Foreign word | persona/FW non/FW grata/FW |

IN | Preposition or subordinating con | uh, well, yes |

JJ | Adjective | good, bad, ugly |

JJR | Adjective, comparative | better, nicer |

JJS | Adjective, superlative | best, nicest |

LS | List item marker | a., b., 1., 2. |

MD | Modal | can, would, will |

NN | Noun, singular or mass | tree, chair |

NNS | Noun, plural | trees, chairs |

NNP | Proper noun, singular | John, Paul, CIA |

NNPS | Proper noun, plural | Johns, Pauls, CIAs |

PDT | Predeterminer | all/PDT this marble, many/PDT a soul |

POS | Possessive ending | John/NNP 's/POS, the parentss/NNP '/POS distress |

PRP | Personal pronoun | I, you, he |

PRP$ | Possessive pronoun | mine, yours |

RB | Adverb | evry, enough, not |

RBR | Adverb, comparative | later |

RBS | Adverb, superlative | latest |

RP | Particle | RP |

SYM | Symbol | CO2 |

TO | to | to |

UH | Interjection | uhm, uh |

VB | Verb, base form | go, walk |

VBD | Verb, past tense | walked, saw |

VBG | Verb, gerund or present particip | walking, seeing |

VBN | Verb, past participle | walked, thought |

VBP | Verb, non-3rd person singular pr | walk, think |

VBZ | Verb, 3rd person singular presen | walks, thinks |

WDT | Wh-determiner | which, that |

WP | Wh-pronoun | what, who, whom (wh-pronoun) |

WP$ | Possessive wh-pronoun | whose, who (wh-words) |

WRB | Wh-adverb | how, where, why (wh-adverb) |

Assigning these pos-tags to words appears to be rather straight forward. However, pos-tagging is quite complex and there are various ways by which a computer can be trained to assign pos-tags. For example, one could use orthographic or morphological information to devise rules such as. . .

If a word ends in ment, assign the pos-tag

NN(for common noun)If a word does not occur at the beginning of a sentence but is capitalized, assign the pos-tag

NNP(for proper noun)

Using such rules has the disadvantage that pos-tags can only be assigned to a relatively small number of words as most words will be ambiguous – think of the similarity of the English plural (-(e)s) and the English 3rd person, present tense indicative morpheme (-(e)s), for instance, which are orthographically identical.Another option would be to use a dictionary in which each word is as-signed a certain pos-tag and a program could assign the pos-tag if the word occurs in a given text. This procedure has the disadvantage that most words belong to more than one word class and pos-tagging would thus have to rely on additional information.The problem of words that belong to more than one word class can partly be remedied by including contextual information such as. .

- If the previous word is a determiner and the following word is a

common noun, assign the pos-tag

JJ(for a common adjective)

This procedure works quite well but there are still better options.The best way to pos-tag a text is to create a manually annotated training set which resembles the language variety at hand. Based on the frequency of the association between a given word and the pos-tags it is assigned in the training data, it is possible to tag a word with the pos-tag that is most often assigned to the given word in the training data.All of the above methods can and should be optimized by combining them and additionally including pos–n–grams, i.e. determining a pos-tag of an unknown word based on which sequence of pos-tags is most similar to the sequence at hand and also most common in the training data.This introduction is extremely superficial and only intends to scratch some of the basic procedures that pos-tagging relies on. The interested reader is referred to introductions on machine learning and pos-tagging such as e.g.https://class.coursera.org/nlp/lecture/149.

There are several different R packages that assist with pos-tagging

texts (see Kumar and Paul 2016). In this tutorial,

we will use the udpipe (Wijffels 2021). The

udpipe package is really great as it is easy to use, covers

a wide range of languages, is very flexible, and very accurate.

Preparation and session set up

This tutorial is based on R. If you have not installed R or are new to it, you will find an introduction to and more information how to use R here. For this tutorials, we need to install certain packages from an R library so that the scripts shown below are executed without errors. Before turning to the code below, please install the packages by running the code below this paragraph. If you have already installed the packages mentioned below, then you can skip ahead ignore this section. To install the necessary packages, simply run the following code - it may take some time (between 1 and 5 minutes to install all of the libraries so you do not need to worry if it takes some time).

# install packages

install.packages("dplyr")

install.packages("stringr")

install.packages("udpipe")

install.packages("flextable")

install.packages("here")

# install klippy for copy-to-clipboard button in code chunks

install.packages("remotes")

remotes::install_github("rlesur/klippy")Now that we have installed the packages, we activate them as shown below.

# load packages

library(dplyr)

library(stringr)

library(udpipe)

library(flextable)

# activate klippy for copy-to-clipboard button

klippy::klippy()Once you have installed R and RStudio and initiated the session by executing the code shown above, you are good to go.

POS-Tagging with UDPipe

UDPipe was developed at the Charles University in Prague and the

udpipe R package (Wijffels 2021) is an

extremely interesting and really fantastic package as it provides a very

easy and handy way for language-agnostic tokenization, pos-tagging,

lemmatization and dependency parsing of raw text in R. It is

particularly handy because it addresses and remedies major shortcomings

that previous methods for pos-tagging had, namely

- it offers a wide range of language models (64 languages at this point)

- it does not rely on external software (like, e.g., TreeTagger, that had to be installed separately and could be challenging when using different operating systems)

- it is really easy to implement as one only need to install and load

the

udpipepackage and download and activate the language model one is interested in - it allows to train and tune one’s own models rather easily

The available pre-trained language models in UDPipe are:

Languages | Models |

|---|---|

Afrikaans | afrikaans-afribooms |

Ancient Greek | ancient_greek-perseus, ancient_greek-proiel |

Arabic | arabic-padt |

Armenian | armenian-armtdp |

Basque | basque-bdt |

Belarusian | belarusian-hse |

bulgarian-btb | bulgarian-btb |

Buryat | buryat-bdt |

Catalan | catalan-ancora |

Chinese | chinese-gsd, chinese-gsdsimp, classical_chinese-kyoto |

Coptic | coptic-scriptorium |

Croatian | croatian-set |

Czech | czech-cac, czech-cltt, czech-fictree, czech-pdt |

Danish | danish-ddt |

Dutch | dutch-alpino, dutch-lassysmall |

English | english-ewt, english-gum, english-lines, english-partut |

Estonian | estonian-edt, estonian-ewt |

Finnish | finnish-ftb, finnish-tdt |

French | french-gsd, french-partut, french-sequoia, french-spoken |

Galician | galician-ctg, galician-treegal |

German | german-gsd, german-hdt |

Gothic | gothic-proiel |

Greek | greek-gdt |

Hebrew | hebrew-htb |

Hindi | hindi-hdtb |

Hungarian | hungarian-szeged |

Indonesian | indonesian-gsd |

Irish Gaelic | irish-idt |

Italian | italian-isdt, italian-partut, italian-postwita, italian-twittiro, italian-vit |

Japanese | japanese-gsd |

Kazakh | kazakh-ktb |

Korean | korean-gsd, korean-kaist |

Kurmanji | kurmanji-mg |

Latin | latin-ittb, latin-perseus, latin-proiel |

Latvian | latvian-lvtb |

Lithuanian | lithuanian-alksnis, lithuanian-hse |

Maltese | maltese-mudt |

Marathi | marathi-ufal |

North Sami | north_sami-giella |

Norwegian | norwegian-bokmaal, norwegian-nynorsk, norwegian-nynorsklia |

Old Church Slavonic | old_church_slavonic-proiel |

Old French | old_french-srcmf |

Old Russian | old_russian-torot |

Persian | persian-seraji |

Polish | polish-lfg, polish-pdb, polish-sz |

Portugese | portuguese-bosque, portuguese-br, portuguese-gsd |

Romanian | romanian-nonstandard, romanian-rrt |

Russian | russian-gsd, russian-syntagrus, russian-taiga |

Sanskrit | sanskrit-ufal |

Scottish Gaelic | scottish_gaelic-arcosg |

Serbian | serbian-set |

Slovak | slovak-snk |

Slovenian | slovenian-ssj, slovenian-sst |

Spanish | spanish-ancora, spanish-gsd |

Swedish | swedish-lines, swedish-talbanken |

Tamil | tamil-ttb |

Telugu | telugu-mtg |

Turkish | turkish-imst |

Ukrainian | ukrainian-iu |

Upper Sorbia | upper_sorbian-ufal |

Urdu | urdu-udtb |

Uyghur | uyghur-udt |

Vietnamese | vietnamese-vtb |

Wolof | wolof-wtb |

The udpipe R package also allows you to easily train your own models,

based on data in CONLL-U format, so that you can use these for your own

commercial or non-commercial purposes. This is described in the other

vignette of this package which you can view by the command

vignette("udpipe-train", package = "udpipe")

To download any of these models, we can use the

udpipe_download_model function. For example, to download

the english-ewt model, we would use the call:

m_eng <- udpipe::udpipe_download_model(language = "english-ewt").

We start by loading a text

# load text

text <- readLines("https://slcladal.github.io/data/testcorpus/linguistics06.txt", skipNul = T)

# clean data

text <- text %>%

str_squish() Now that we have a text that we can work with, we will download a pre-trained language model.

# download language model

m_eng <- udpipe::udpipe_download_model(language = "english-ewt")If you have downloaded a model once, you can also load the model directly from the place where you stored it on your computer. In my case, I have stored the model in a folder called udpipemodels

# load language model from your computer after you have downloaded it once

m_eng <- udpipe_load_model(file = here::here("udpipemodels", "english-ewt-ud-2.5-191206.udpipe"))We can now use the model to annotate out text.

# tokenise, tag, dependency parsing

text_anndf <- udpipe::udpipe_annotate(m_eng, x = text) %>%

as.data.frame() %>%

dplyr::select(-sentence)

# inspect

head(text_anndf, 10)## doc_id paragraph_id sentence_id token_id token lemma upos xpos

## 1 doc1 1 1 1 Linguistics Linguistic NOUN NNS

## 2 doc1 1 1 2 also also ADV RB

## 3 doc1 1 1 3 deals deal NOUN NNS

## 4 doc1 1 1 4 with with ADP IN

## 5 doc1 1 1 5 the the DET DT

## 6 doc1 1 1 6 social social ADJ JJ

## 7 doc1 1 1 7 , , PUNCT ,

## 8 doc1 1 1 8 cultural cultural ADJ JJ

## 9 doc1 1 1 9 , , PUNCT ,

## 10 doc1 1 1 10 historical historical ADJ JJ

## feats head_token_id dep_rel deps misc

## 1 Number=Plur 3 compound <NA> <NA>

## 2 <NA> 3 advmod <NA> <NA>

## 3 Number=Plur 0 root <NA> <NA>

## 4 <NA> 13 case <NA> <NA>

## 5 Definite=Def|PronType=Art 13 det <NA> <NA>

## 6 Degree=Pos 13 amod <NA> SpaceAfter=No

## 7 <NA> 8 punct <NA> <NA>

## 8 Degree=Pos 6 conj <NA> SpaceAfter=No

## 9 <NA> 10 punct <NA> <NA>

## 10 Degree=Pos 6 conj <NA> <NA>It can be useful to extract only the words and their pos-tags and convert them back into a text format (rather than a tabular format).

tagged_text <- paste(text_anndf$token, "/", text_anndf$xpos, collapse = " ", sep = "")

# inspect tagged text

tagged_text## [1] "Linguistics/NNS also/RB deals/NNS with/IN the/DT social/JJ ,/, cultural/JJ ,/, historical/JJ and/CC political/JJ factors/NNS that/WDT influence/VBP language/NN ,/, through/IN which/WDT linguistic/NN and/CC language/NN -/HYPH based/VBN context/NN is/VBZ often/RB determined/JJ ./. Research/VB on/IN language/NN through/IN the/DT sub-branches/NNS of/IN historical/JJ and/CC evolutionary/JJ linguistics/NNS also/RB focus/RB on/IN how/WRB languages/NNS change/VBP and/CC grow/VBP ,/, particularly/RB over/IN an/DT extended/JJ period/NN of/IN time/NN ./. Language/NN documentation/NN combines/VBZ anthropological/JJ inquiry/NN (/-LRB- into/IN the/DT history/NN and/CC culture/NN of/IN language/NN )/-RRB- with/IN linguistic/JJ inquiry/NN ,/, in/IN order/NN to/TO describe/VB languages/NNS and/CC their/PRP$ grammars/NNS ./. Lexicography/NNP involves/VBZ the/DT documentation/NN of/IN words/NNS that/WDT form/VBP a/DT vocabulary/NN ./. Such/PDT a/DT documentation/NN of/IN a/DT linguistic/JJ vocabulary/NN from/IN a/DT particular/JJ language/NN is/VBZ usually/RB compiled/VBN in/IN a/DT dictionary/NN ./. Computational/JJ linguistics/NNS is/VBZ concerned/JJ with/IN the/DT statistical/NN or/CC rule/NN -/HYPH based/VBN modeling/NN of/IN natural/JJ language/NN from/IN a/DT computational/JJ perspective/NN ./. Specific/JJ knowledge/NN of/IN language/NN is/VBZ applied/VBN by/IN speakers/NNS during/IN the/DT act/NN of/IN translation/NN and/CC interpretation/NN ,/, as/RB well/RB as/IN in/IN language/NN education/NN �/$ the/DT teaching/NN of/IN a/DT second/JJ or/CC foreign/JJ language/NN ./. Policy/NN makers/NNS work/VBP with/IN governments/NNS to/TO implement/VB new/JJ plans/NNS in/IN education/NN and/CC teaching/NN which/WDT are/VBP based/VBN on/IN linguistic/JJ research/NN ./."POS-Tagging non-English texts

We can apply the same method for annotating, e.g. adding pos-tags, to

other languages. For this, we could train our own model, or, we can use

one of the many pre-trained language models that udpipe

provides.

Let us explore how to do this by using example texts from different languages, here from German and Spanish (but we could also annotate texts from any of the wide variety of languages for which UDPipe provides pre-trained models.

We begin by loading a German and a Dutch text.

# load texts

gertext <- readLines("https://slcladal.github.io/data/german.txt")

duttext <- readLines("https://slcladal.github.io/data/dutch.txt")

# inspect texts

gertext; duttext## [1] "Sprachwissenschaft untersucht in verschiedenen Herangehensweisen die menschliche Sprache."## [1] "Taalkunde, ook wel taalwetenschap of linguïstiek, is de wetenschappelijke studie van de natuurlijke talen."Next, we install the pre-trained language models.

# download language model

m_ger <- udpipe::udpipe_download_model(language = "german-gsd")

m_dut <- udpipe::udpipe_download_model(language = "dutch-alpino")Or we load them from our machine (if we have downloaded and saved them before).

# load language model from your computer after you have downloaded it once

m_ger <- udpipe::udpipe_load_model(file = here::here("udpipemodels", "german-gsd-ud-2.5-191206.udpipe"))

m_dut <- udpipe::udpipe_load_model(file = here::here("udpipemodels", "dutch-alpino-ud-2.5-191206.udpipe"))Now, pos-tag the German text.

# tokenise, tag, dependency parsing of german text

ger_pos <- udpipe::udpipe_annotate(m_ger, x = gertext) %>%

as.data.frame() %>%

dplyr::summarise(postxt = paste(token, "/", xpos, collapse = " ", sep = "")) %>%

dplyr::pull(unique(postxt))

# inspect

ger_pos## [1] "Sprachwissenschaft/NN untersucht/VVFIN in/APPR verschiedenen/ADJA Herangehensweisen/NN die/ART menschliche/NN Sprache/NN ./$."And finally, we also pos-tag the Dutch text.

# tokenise, tag, dependency parsing of german text

nl_pos <- udpipe::udpipe_annotate(m_dut, x = duttext) %>%

as.data.frame() %>%

dplyr::summarise(postxt = paste(token, "/", xpos, collapse = " ", sep = "")) %>%

dplyr::pull(unique(postxt))

# inspect

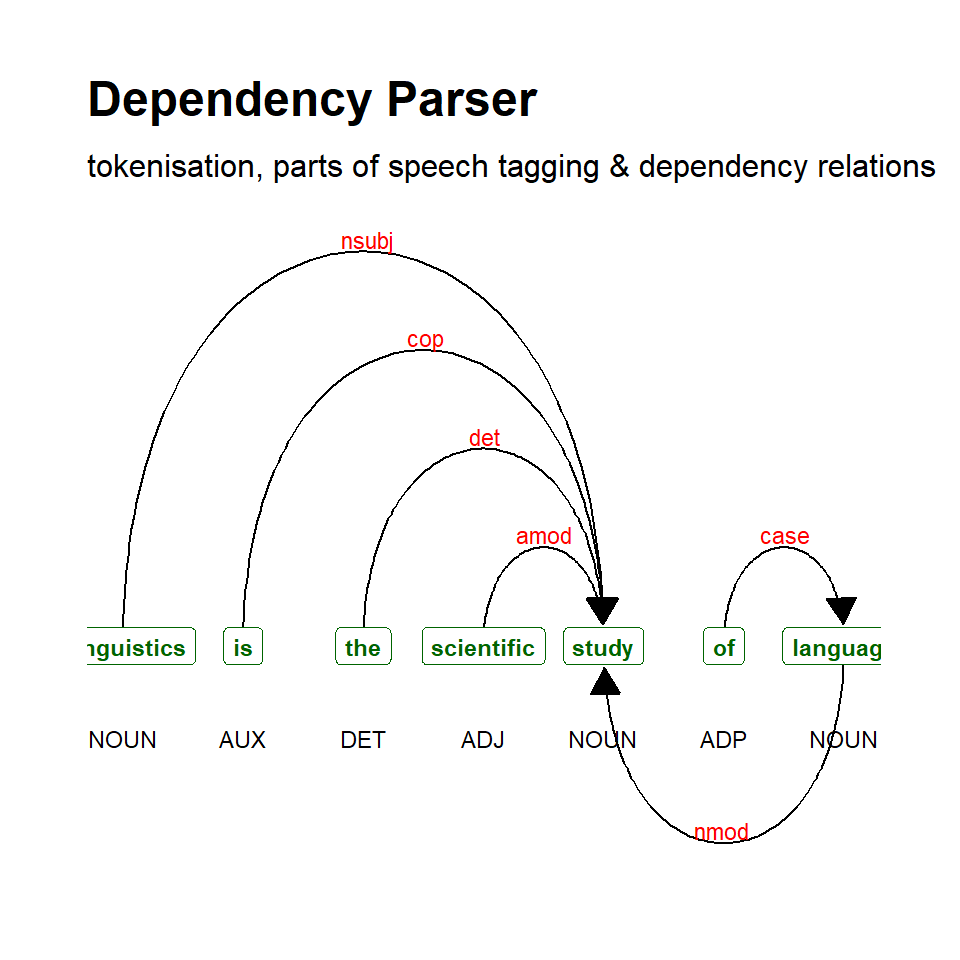

nl_pos## [1] "Taalkunde/N|soort|ev|basis|zijd|stan ,/LET ook/BW wel/BW taalwetenschap/N|soort|ev|basis|zijd|stan of/VG|neven linguïstiek/N|soort|ev|basis|zijd|stan ,/LET is/WW|pv|tgw|ev de/LID|bep|stan|rest wetenschappelijke/ADJ|prenom|basis|met-e|stan studie/N|soort|ev|basis|zijd|stan van/VZ|init de/LID|bep|stan|rest natuurlijke/ADJ|prenom|basis|met-e|stan talen/N|soort|mv|basis ./LET"Dependency Parsing Using UDPipe

In addition to pos-tagging, we can also generate plots showing the

syntactic dependencies of the different constituents of a sentence. For

this, we generate an object that contains a sentence (in this case, the

sentence Linguistics is the scientific study of language), and

we then plot (or visualize) the dependencies using the

textplot_dependencyparser function.

# parse text

sent <- udpipe::udpipe_annotate(m_eng, x = "Linguistics is the scientific study of language") %>%

as.data.frame()

# inspect

head(sent)## doc_id paragraph_id sentence_id

## 1 doc1 1 1

## 2 doc1 1 1

## 3 doc1 1 1

## 4 doc1 1 1

## 5 doc1 1 1

## 6 doc1 1 1

## sentence token_id token

## 1 Linguistics is the scientific study of language 1 Linguistics

## 2 Linguistics is the scientific study of language 2 is

## 3 Linguistics is the scientific study of language 3 the

## 4 Linguistics is the scientific study of language 4 scientific

## 5 Linguistics is the scientific study of language 5 study

## 6 Linguistics is the scientific study of language 6 of

## lemma upos xpos feats

## 1 Linguistic NOUN NNS Number=Plur

## 2 be AUX VBZ Mood=Ind|Number=Sing|Person=3|Tense=Pres|VerbForm=Fin

## 3 the DET DT Definite=Def|PronType=Art

## 4 scientific ADJ JJ Degree=Pos

## 5 study NOUN NN Number=Sing

## 6 of ADP IN <NA>

## head_token_id dep_rel deps misc

## 1 5 nsubj <NA> <NA>

## 2 5 cop <NA> <NA>

## 3 5 det <NA> <NA>

## 4 5 amod <NA> <NA>

## 5 0 root <NA> <NA>

## 6 7 case <NA> <NA>We now generate the plot.

# generate dependency plot

dplot <- textplot::textplot_dependencyparser(sent, size = 3)

# show plot

dplot

Citation & Session Info

Schweinberger, Martin. 2023. POS-Tagging and Syntactic Parsing with R. Brisbane: The University of Queensland. url: https://ladal.edu.au/pos.html (Version 2023.05.31).

@manual{schweinberger20232pos,

author = {Schweinberger, Martin},

title = {pos-Tagging and Syntactic Parsing with R},

note = {https://slcladal.github.io/tagging.html},

year = {2023},

organization = "The University of Queensland, School of Languages and Cultures},

address = {Brisbane},

edition = {2023.05.31}

}sessionInfo()## R version 4.2.2 (2022-10-31 ucrt)

## Platform: x86_64-w64-mingw32/x64 (64-bit)

## Running under: Windows 10 x64 (build 22621)

##

## Matrix products: default

##

## locale:

## [1] LC_COLLATE=English_Australia.utf8 LC_CTYPE=English_Australia.utf8

## [3] LC_MONETARY=English_Australia.utf8 LC_NUMERIC=C

## [5] LC_TIME=English_Australia.utf8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] udpipe_0.8.11 stringr_1.5.0 dplyr_1.1.2 flextable_0.9.1

##

## loaded via a namespace (and not attached):

## [1] fontquiver_0.2.1 rprojroot_2.0.3 tools_4.2.2

## [4] bslib_0.4.2 utf8_1.2.3 R6_2.5.1

## [7] colorspace_2.1-0 withr_2.5.0 tidyselect_1.2.0

## [10] gridExtra_2.3 curl_5.0.0 compiler_4.2.2

## [13] textplot_0.2.2 textshaping_0.3.6 cli_3.6.1

## [16] xml2_1.3.4 officer_0.6.2 fontBitstreamVera_0.1.1

## [19] labeling_0.4.2 sass_0.4.6 scales_1.2.1

## [22] askpass_1.1 systemfonts_1.0.4 digest_0.6.31

## [25] rmarkdown_2.21 gfonts_0.2.0 pkgconfig_2.0.3

## [28] htmltools_0.5.5 fastmap_1.1.1 highr_0.10

## [31] rlang_1.1.1 rstudioapi_0.14 httpcode_0.3.0

## [34] shiny_1.7.4 jquerylib_0.1.4 generics_0.1.3

## [37] farver_2.1.1 jsonlite_1.8.4 zip_2.3.0

## [40] magrittr_2.0.3 Matrix_1.5-4.1 Rcpp_1.0.10

## [43] munsell_0.5.0 fansi_1.0.4 gdtools_0.3.3

## [46] viridis_0.6.3 lifecycle_1.0.3 stringi_1.7.12

## [49] yaml_2.3.7 ggraph_2.1.0 MASS_7.3-60

## [52] grid_4.2.2 promises_1.2.0.1 ggrepel_0.9.3

## [55] crayon_1.5.2 lattice_0.21-8 graphlayouts_1.0.0

## [58] knitr_1.43 klippy_0.0.0.9500 pillar_1.9.0

## [61] igraph_1.4.3 uuid_1.1-0 crul_1.4.0

## [64] glue_1.6.2 evaluate_0.21 fontLiberation_0.1.0

## [67] data.table_1.14.8 vctrs_0.6.2 tweenr_2.0.2

## [70] httpuv_1.6.11 gtable_0.3.3 openssl_2.0.6

## [73] purrr_1.0.1 polyclip_1.10-4 tidyr_1.3.0

## [76] assertthat_0.2.1 cachem_1.0.8 ggplot2_3.4.2

## [79] xfun_0.39 ggforce_0.4.1 mime_0.12

## [82] xtable_1.8-4 tidygraph_1.2.3 later_1.3.1

## [85] viridisLite_0.4.2 ragg_1.2.5 tibble_3.2.1

## [88] ellipsis_0.3.2 here_1.0.1References

If you want to render the R Notebook on your machine, i.e. knitting the document to html or a pdf, you need to make sure that you have R and RStudio installed and you also need to download the bibliography file and store it in the same folder where you store the Rmd file.↩︎